At its simplest, the digital twin (DT) is a dynamic digital representation of a physical piece of equipment, or thing, together with its associated environment. Every DT has a dynamic data model containing a number of data attributes of the physical thing or system it represents. Attributes are associated with sensors that measure temperature, pressure, and other variables and associated physics in order to represent real world operating conditions as well as static values like the installation date or original equipment manufacturer (OEM).

A DT can also consist of multiple nested twins that provide narrower or wider views across equipment and assets based on the process or use case. For example, a complex asset like an oil refinery can have a DT for a compressor motor, the compressor, the process train served by the compressor, and for the entire multi-train plant. Depending on its size, the refinery could have anywhere from 50,000 to 500,000 sensors taking measurements represented in the DT. At the end of the day, digital twins provide the necessary schemas required to easily compare and benchmark like things against one another—helping the user/operator to understand what’s operating well and what’s not.

Three types of digital twins

As the size and complexity of DTs vary, so do functions and lifecycles. We like to think of three types:

• Status twins originate from the earliest design stages of the product cycle, mostly representing consumer products like a connected home or connected car. Data from product lifecycle management (PLM) systems is a major input, and use cases are typically device management, product control, and product quality. Most product twins have short service lives when compared to industrial assets.

• Operational twins enable industrial organizations to improve the operations of their complex plant and equipment and are used to support the work of engineers (process, reliability, etc.) and data scientists doing analytics and lifecycle operations. Operational DTs may inherit data from a status DT. Operational DTs may also include machine learning analytical models. Dan Miklovic at LNS Research calls these “Smart-Connected Asset” DTs. Operational DTs have long lifecycles, and will change over time.

• Simulation twins replicate equipment/device behavior and contain built-in physics models and even process models for what’s connected to the equipment. Simulation-twin use cases include simulations of how equipment performs under varying conditions, training and virtual reality (VR).

Other twins (cognitive twin or autonomous twin) are beginning to receive attention, and tend to be an amalgamation of those listed above

Use cases for the operational twin

Operations teams are looking for ways to improve asset utilization, cut operations and maintenance costs, optimize capital spend and reduce health, safety and environmental incidents. At the heart of every company’s digital transformation is a desire to achieve these objectives through analytical solutions that can augment, and even be proxies for engineers and technicians. But getting there is really hard, whether it’s working to deploy basic analytics like business intelligence, sophisticated machine-learning-driven analytics, or Industrial Internet of Things (IIoT) applications through platforms provided by OEM vendors like GE, Honeywell and ABB.

The biggest challenge is sensor data, which is mostly locked up in process historian systems and stored in a format (typically flat with no context) making analytics next to impossible. This data must be modeled and then kept continuously up to date to reflect the underlying state of affairs with the equipment and its associated asset. An operational DT solves this challenge by enabling the federation of data via the DT’s data model, which is built using metadata associated with the physical equipment.

Once this data model exists, any operations data represented by its metadata in the DT can be shipped to a data lake in an organized way for analytics. The DT’s data model can also be published at the edge to enable shipping data from one system to another, for example from a PI historian to an IIoT application like GE Predix APM. The Operational digital twin also allows for continuous maintenance of the operations data to reflect constantly changing real world conditions.

Five guiding principles

When it comes to building operational DTs that are both effective and sustainable, we have five ideas.

1. Leave no data behind. Data is the foundation for the DT, so bring it all together from the following sources:

• Time series data from data historians, IoT hubs/gateways, and telematics systems;

• Transactional data residing in Enterprise Asset Management, Laboratory Information Management System, Field Service Management System etc.; and

• Static data from spreadsheets, especially those left behind by engineering firms who built the plants, and process hazard data.

Federating as much data as possible via the DT will improve the value of IIoT analytics and applications, and also reveal new and valuable information about the physical twin that was previously unavailable. For example, a DT containing maintenance, equipment, sensor and process hazard data on a critical process can give operators brand new insights on the state of maintenance on critical equipment and how it relates to high risk process safety hazards.

2. Standardize equipment templates across the enterprise. The starting point for building operational DTs is theequipment template which allows for modeling theequipment, its sub-components, associated sensors,sensor attributes, and other related metadata like equipmentfunctional location. Asset operators too often relyon the OEM model, or try to keep the model limitedto only the data streams they presume they need.

Instead, use standard templates by target equipment for every DT. This will allow for easier analytics across all compressors, pumps, motors etc., providing a view of instrumentation coverage across each, and performance benchmarking allowing comparison of different OEMs.

3. Insist on flexibility. Those in industrial companies responsible for delivering operational DTs have a huge challenge. Not only must the equipment and assets be represented hierarchically (compressor motor to compressor) but also across a process. Compounding this challenge, different data off-takers want to consume the data differently, and what’s good for the reliability engineer who wants a hierarchical view of target equipment at one or multiple sites doesn’t work for the process engineer who needs to see the data across multiple units within an overall process. DTs should be flexible enough to handle both hierarchies and process representations of equipment and assets, but be able to be easily managed to meet the need of the data consumer.

4. Integrity is integral. Asset operators deploying analytics and applications will struggle getting their engineers to adopt these new tools if they can’t assure the integrity of the data—especially the sensor data—feeding the tools. Engineers understand the serious drift issues associated with sensor data and need to know they can trust it. Operational DTs should establish and maintain ongoing integrity of time-series data feeding twin, including being able to manage changes to tags feeding the DT.

5. Leverage the cloud. Cloud-enabled data infrastructure provides the scale required to build and maintain operational DTs that can then be published to the edge for streaming analytics. The cloud also allows DTs to be built and shared across the enterprise, and enables analytics to compare processes and performance and share best practices enterprise-wide.

First-generation digital twins

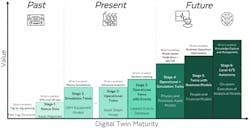

We see DTs evolving in stages (figure), and the good news is that through past investments many companies already have ingredients in place to begin (and many have already started and not realized) their journey.

Many asset operators already have in place status digital twins, which provide a fixed schema of how to view the current readings of a piece of equipment/device. Great examples of status digital twins in the industrial world are asset registries, control systems, process models for simulations, and multivariate process control.

All of these have been around for more than 20 years and require someone to manually create a schema of the physical environment. More often than not, operational data is stuck within a flat reference file where engineers and technicians need to memorize the name of every single instrumentation signal, resorting to sensor-naming conventions to help manage the data. Creating a status DT often required manually mapping every single sensor in the instrument registry to a process drawing, just to build a single process screen. The effort to create a new screen or report could take months of work.

Just to see “the current voltage across all pumps for the past 3 months,” one would need to build a whole new schema and data model mapping all the voltage sensor readings across tens of thousands of pumps. This would take months to build, so most assumed it wasn’t possible. Traditionally, status DTs have taken this approach wherein the use case defines the data model. This approach doesn’t scale because one can’t have a brand new data model for every new question.

What's possible today

Many in the industrial sector have started down the analytics path by pursuing simulation digital twins, which require physics-based models to simulate how equipment operates. Even OEMs have begun selling these physics models as a service. When a physics model for a piece of equipment stands on its own and is not related to any other equipment, this model works incredibly well (e.g. onshore oilfield pumps, windmills, locomotives). For more complex processes and assets—a majority of industrial operations—these models don’t scale well because they’re incredibly hard to create, let alone maintain.

Accordingly, we see a different second stage in the DT evolution as operators move from basic status DTs and into a series of digital transformations of their twins. We’re often asked, “How do I extract greater value from the operational data I’ve gone to great cost to collect and store?” Our answer is to start the journey with an operational digital twin that connects a flexible, community managed data model to high-fidelity, real-time and historical data to support performing general purpose analytics in tools like PowerBI or Tableau. This avoids having to build custom data models for each analytical activity. To deliver a truly capable operational DT it must be a community-managed, flexible data model, allowing anyone (with appropriate permissions) to add their own context.

In the industrial world, the operational DT is the basis of all future work, because it is the data model. A flexible data model, along with some level of data integrity, allows operators to quickly serve data to the appropriate constituencies and applications no matter the analytical use case. All of a sudden, data becomes a massive asset for the organization and begins to take on a gravity of its own.

We’ve also begun to see a new, more advanced operational twin emerge in the market, one that includes events. An event, or labeled time-frame, unlocks machine learning (ML) capabilities. In the ML world, they call labeled data “supervised data” (ML works better with the “right data” not just “more data”). With supervised data, ML algorithms know what to look for, and can now begin to uncover insights around what may be happening during the event, just prior to the event, or even assessing what are the leading indicators of when a future event may occur.

Because the operational digital twin is the foundation of these labeled events, the ML analysis can run across thousands of sensors and over a decade’s worth of data. (Sometimes we’ve seen companies only provide events without the data model behind it—limiting analytical capabilities to just a few sensors.) With the ability to analyze thousands of sensors and decades of data, identifying what is causing an event—or predicting when a future event may occur months ahead of time—actually becomes a reality.

Twins in the future

Once the operational digital twin is well established, the next step is to augment ML capabilities with both process and physics information—what we and others call the simulation digital twin because it simulates operations of a piece of equipment/device through physics and process models.

The best part of having an operational digital twin underlying the physics and process information is that one doesn’t need a fully comprehensive physics model. The physics and process models actually provide the necessary feature engineering required to amplify the results of ML-based analytics; the more data is layered in over time, the better the operational and simulation models become.

Based on business and operational situation, one may choose to develop a simulation digital twin before building an operational digital twin with events. We’ve typically seen better outcomes with operational twins over simulation twins, and operational twins are easier to maintain. That said, the two together are more powerful than each in isolation.

With both operation and simulation digital twins in place, it becomes a lot easier for engineers to improve operations. The next step is to connect those improvements to the income statement and balance sheet. To improve profitability and reduce risk, we need to store the relationship between the digital twin and financial models, people, and even process hazard information.

With this information it becomes easier to use machine learning and analytics to support decisions and improve overall operations. The last stage of DT evolution that we envision is the autonomous digital twin (often called a “cognitive twin”), the overarching twin that features decision-making and control decisions managed by software. We don’t see this happening for several years, but in some circles it has come into vogue, given recent developments in the automotive sector. While a motor vehicle digital twin is much simpler than an industrial digital twin, the Society of Automotive Engineers (SAE) has published a great guide on how to think about the levels of autonomy that will emerge for industrials.

Beyond the autonomous digital twin, we may begin to see them evolve from just supporting decisions to actually making them. This digital twin must be trained in the cloud (where scalable compute is available), but be allowed to execute at the site, or “the edge,” requiring the right hardware to make these low-latency decisions.

Initially, there will still be a human in the loop, or what the SAE calls level 4 autonomy. However, as humans take the appropriate actions around ambiguous events, as more sensing is added, and as the simulation digital twins can cycle through the same scenarios hundreds of thousands of times, at some point we may begin to see full “level 5” autonomy, without humans in the loop.

Behind the byline

CEO Andy Bane, together with Founder and Head of Product Sameer Kalwani and VP Engineering Sean McCormick, form the leadership team of Element Analytics, an industrial analytics software company created to empower organizations to achieve new levels of operational performance. For more information on how the company’s product, the Digital Twin System, facilitates the construction and ongoing maintenance of operational digital twins, visit elementanalytics.com.